The Pantheon Blog

Latest insights

Log Forwarding in Beta: Delivering Actionable Insights for Customers

The Web Is Dynamic: Next.js on Pantheon Enters Private Beta

Uncomplicate Decoupled: Learn About Next.js on Pantheon

Systems and Stories: How Developers and Marketers Can Work in Sync

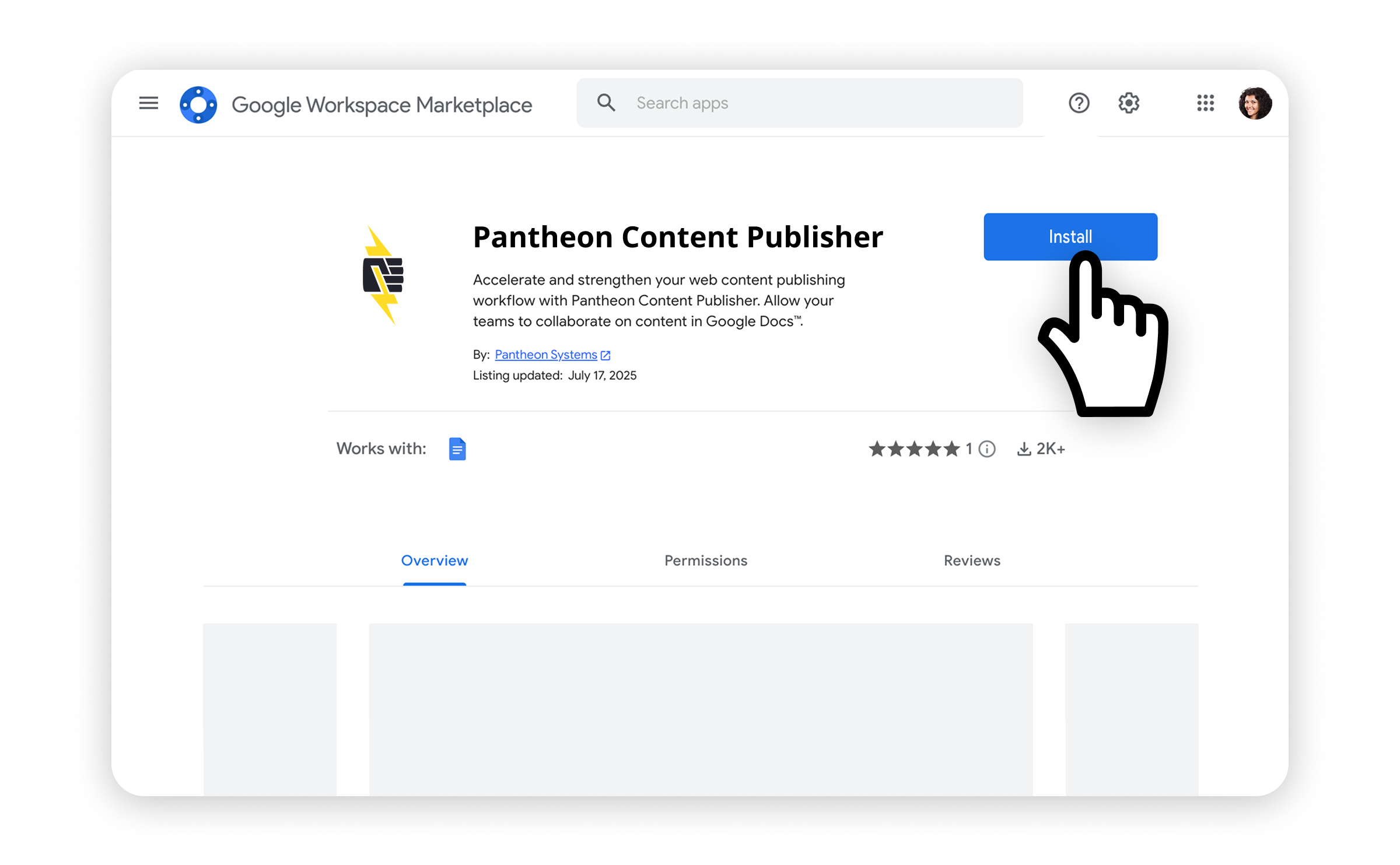

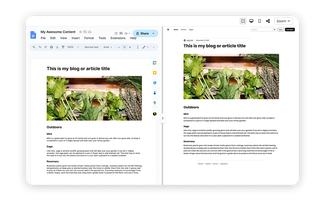

How to Implement Content Publisher on a New or Existing Website

Yes, CEOs care deeply about websites

Fix Your Content Workflow: Content Publisher Now in Public Preview

Why we’re deploying a new PHP runtime

Pantheon Joins Drupal AI Initiative

From ChatGPT to AI Overviews: How Enterprises Win in Multiplatform AI Search

Why Anderson Cooper Should Use Content Publisher

Where do you really manage your content?