What Computer Assembles the Website?


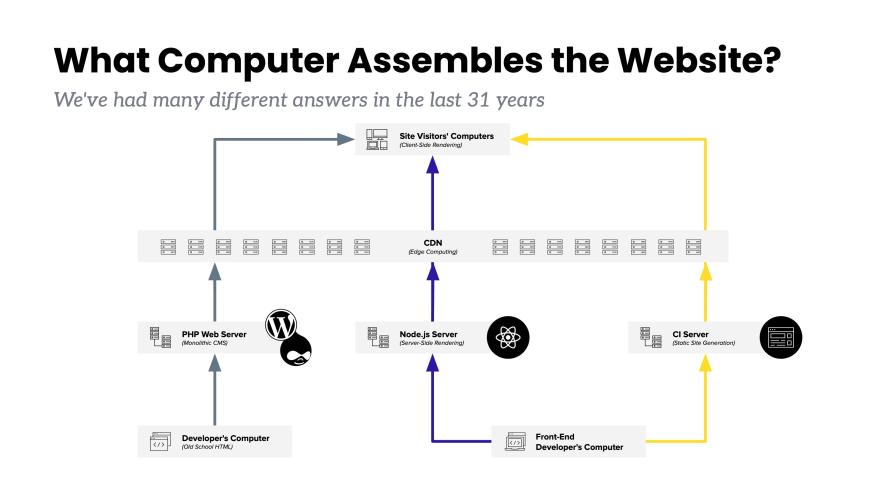

An alphabet soup of website rendering initialisms flooded the last decade of website operations. Even seemingly simple ones like SSG and SSR (Static Site Generation and Server-Side Rendering) obscure the fact that, in practice, they often mix in a fair amount of Client-Side Rendering too.

Throw in the more framework-specific or platform-provided techniques like ISR, DPR, and, as of a few weeks ago, RSG, and it's a wonder that anyone gets any work done at all amid the noise. As I wrote in the previous blog post in this series, at Pantheon we take on the challenge of finding and solidifying the state of the art while avoiding the bleeding and band-aids found on the bleeding edge of technology.

The best way I have found to cut through the chaos of these initialisms (they're not acronyms) is to ask the question in a different way: What computer assembles the website?


As you can see in this (believe it or not) simplified diagram, there are a lot of computers between the laptops of developers writing code to make websites and the varied devices used to browse websites. Servers, clouds, CDNs, and more can each play a role in bringing the site together. To ground this question in more human terms: Which one of these computers brings together the work of the web team?

The Human Perspective

Throughout the history of the web, we can ask: On what computer (often controlled by an IT department) do the templates (often made in collaboration between designers and developers) meet the content (written by editors, marketers, and communications professionals)?

I think it is worthwhile to trace how different teams have answered this question across three decades of deploying websites on the world's web. I'll expand on this in the conclusion of this blog post, but in short, the historical context is a reminder that none of these initialisms (SSG, SSR, ISR, etc.) is right or wrong in an absolute sense. Each rose in prominence at a specific time (or multiple times!) for a variety of reasons that made sense within its context. Zooming out to the level of decades, I find it easier to appreciate the benefits that each method contributed to the Open Web, and I find it easier to conclude that some of these strategies no longer make sense for our customers going forward in the 2020s.

With Old-School HTML, the Developer's Brain Does the Heavy Lifting

The creators of early websites often blended the distinct roles I mentioned above. From their desktop computers, webmasters configured web servers and wrote the HTML of the websites.

For many early, low-traffic websites, it was acceptable to literally run your web server on a personal computer in your home or office. The "computer" combining templates and content was the brain of the webmaster hand-coding the HTML. It was a simpler time.

Specializing, Even in a Monolithic CMS

That simpler time did not last long. Quickly, patterns emerged for running programming languages on a server that could process templates fed with content pulled from a database.

The version of this model near and dear to my heart is of course the LAMP Stack. With Linux, Apache, MySQL and PHP, web teams could run a complex website with no licensing costs. CMSes like Drupal (released in 2001) and WordPress (released in 2003) handled user login, forms, templating and so many other hard questions of web development.

In this era, the three distinct roles mentioned above (IT, developers, marketers) had a relatively clean understanding of how their work fit together.

Content editors signed into the live website and could instantly change content there.

Developers worked on code changes on some other (non-live) environment and deployed to Live when ready.

IT controlled all the servers and computers involved.

I don't want to look back with too-rosy glasses. This model had its drawbacks. Pantheon exists as a company because executing on the LAMP Stack model really well at scale was hard. But when it worked, it worked.

The World Turned Upside Down

And then Steve Jobs walked on stage in 2007 to announce the iPhone and turn everything upside down.

And I stayed in denial for a few years about how big a change the iPhone represented. At first I thought something like: "OK, the screen is smaller. Interesting. Drupal has the concept of child themes. We can just override some CSS, maybe change some markup, to simplify how example.com displays, and put it on mobile.example.com or m.example.com. Problem solved. And most traffic will still happen on real computers."

How shortsighted.

By the time the iPad came around, though, it was becoming increasingly clear that the end device, running iOS or Android, was often the most powerful computer in the whole stack. These devices had cameras, touchscreens, and accelerometers. They had a booming native application ecosystem that could easily leverage all those hardware features. The challenge was way bigger than Responsive Design. It was a fight over whether the Open Web would remain competitive on the devices people carried everywhere in their pockets.

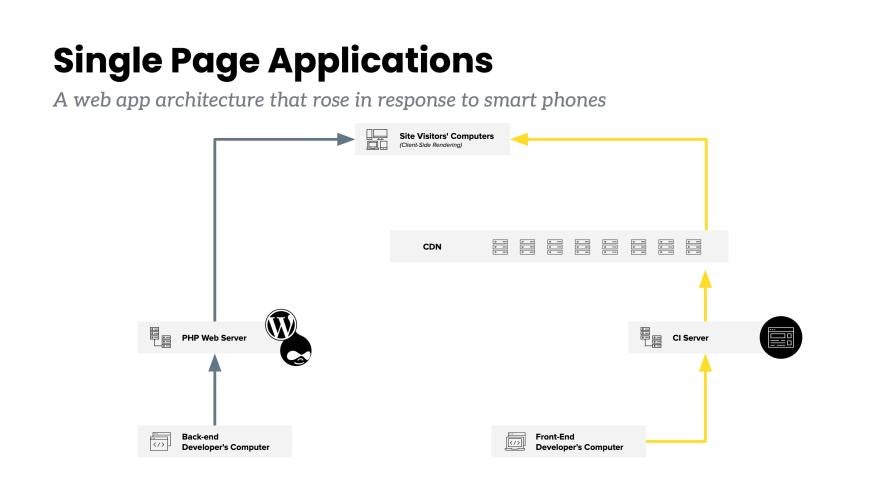


Many of the people who appreciated the scope of the challenge gravitated toward a model dubbed "Single Page Applications." These SPAs made the end-user device (the iPhone, iPad, laptop, etc.) the computer that assembled the website. In this model, the initial payload sent to the browser was mostly JavaScript, which then loaded data from a source like WordPress or Drupal. The CMS was relegated to being an API provider.
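To make the SPA model concrete, here is a minimal TypeScript sketch of code that would run in the visitor's browser: it pulls posts from WordPress's REST API (`/wp-json/wp/v2/posts` is the default posts route) and turns them into HTML on the device. The `renderPostList` name and the injected `fetchJson` parameter are hypothetical; injecting the fetcher keeps the sketch runnable without a live site. A Drupal back end would expose a different API shape.

```typescript
// Only the fields this sketch uses from a WordPress REST API post object.
interface WpPost {
  id: number;
  link: string;
  title: { rendered: string }; // WordPress returns the title already rendered as HTML
}

// Hypothetical injected fetcher. In a browser this could be:
// (url) => fetch(url).then((res) => res.json())
type FetchJson = (url: string) => Promise<unknown>;

// Runs on the end-user device: the CMS only supplies JSON,
// and the browser assembles the markup.
async function renderPostList(apiBase: string, fetchJson: FetchJson): Promise<string> {
  const posts = (await fetchJson(`${apiBase}/wp-json/wp/v2/posts`)) as WpPost[];
  const items = posts.map(
    (post) => `<li><a href="${post.link}">${post.title.rendered}</a></li>`
  );
  return `<ul>${items.join("")}</ul>`;
}

// Usage with a stubbed API response instead of a live WordPress site.
const fakeApi: FetchJson = async (url) => {
  if (!url.endsWith("/wp-json/wp/v2/posts")) {
    throw new Error(`unexpected URL ${url}`);
  }
  return [{ id: 1, link: "https://example.com/hello/", title: { rendered: "Hello" } }];
};

renderPostList("https://example.com", fakeApi).then((html) => console.log(html));
```

Note that none of these posts exist in the HTML the server initially sends; they appear only after the script has run on the device.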

The SPA model enabled rapid iteration on the front end. Developers could explore how best to leverage touch interfaces, different screen sizes and other complexities without having to work through the many layers of the CMS's theme.

Static - Again

But, wow, if you did the SPA architecture poorly, it could get really bad. SPAs led to performance nightmares, accidental security leaks, and, at worst, URLs that no longer meant anything.

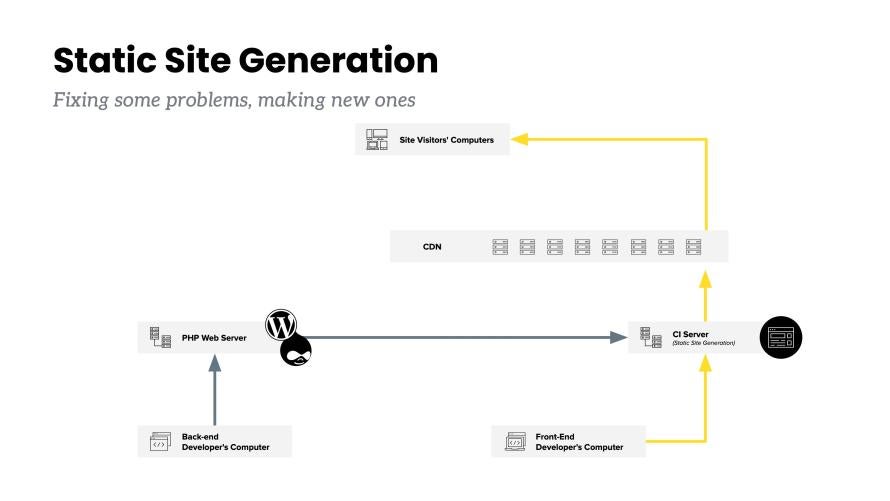


The downsides of SPAs drove a renewed interest in an old solution with a different answer to the question: "What computer assembles the website?" The next blog post in this series will dive deeper into DevOps, but here I should mention that the rise of DevOps culture increased the prominence of the "CI Server."

Developers of all stripes increasingly expected every git commit or git push to trigger a Continuous Integration build process that produced a fully functioning artifact. With CI Servers and CDNs swirling in the zeitgeist, alongside a reluctance to rely on Client-Side Rendering all the time, the old answer of "Static Sites" became fashionable again. Like the hand-coded HTML of the '90s or the static output of certain CMSes in the 2000s, SSG (Static Site Generation) skyrocketed in popularity in the 2010s.
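Stripped to its core, a static site generator runs every template against every piece of content once, at build time, and writes out files. The sketch below is a deliberately tiny, hypothetical `buildSite` function of the kind a CI job might run on each push; the `ContentEntry` shape and the output paths are assumptions, and real generators add routing, asset pipelines, and incremental builds on top.

```typescript
// A piece of content as an editor might write it (hypothetical shape).
interface ContentEntry {
  slug: string;
  title: string;
  body: string;
}

// The template developers maintain: content in, full HTML page out.
type Template = (entry: ContentEntry) => string;

const pageTemplate: Template = (entry) =>
  `<!doctype html><title>${entry.title}</title><h1>${entry.title}</h1>${entry.body}`;

// Runs on the CI server: every page is assembled before any visitor asks for it.
// Returns output file paths mapped to their HTML, ready to upload to a CDN.
function buildSite(entries: ContentEntry[], template: Template): Record<string, string> {
  const files: Record<string, string> = {};
  for (const entry of entries) {
    files[`${entry.slug}/index.html`] = template(entry);
  }
  return files;
}

const output = buildSite(
  [
    { slug: "about", title: "About", body: "<p>Who we are.</p>" },
    { slug: "news", title: "News", body: "<p>Latest updates.</p>" },
  ],
  pageTemplate
);
console.log(Object.keys(output));
```

The catch is built into the design: a content change only reaches visitors after the whole build runs again.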

Front-End Developers (FEDs) often loved this model because it got them in the DevOps door (which otherwise wasn't terribly welcoming to FEDs). Not to mention that, when done well, this model worked really well! Pantheon's own documentation site switched to SSG in this era and still fits the model well.

IT directors were often easy to sway to this model because, under certain conditions, this model could be extremely secure and extremely inexpensive.

Huh, I used a lot of wiggle words in that last sentence. What did content editors think?

Dynamic - Again

Content editors tended not to like the idea that any content change they made would trigger a Continuous Integration process that could take many minutes. And yes, there are ways of reducing those minutes, and yes, maybe you can get it down to seconds, but back in the LAMP Stack model, content editors could see their changes in less than a second.

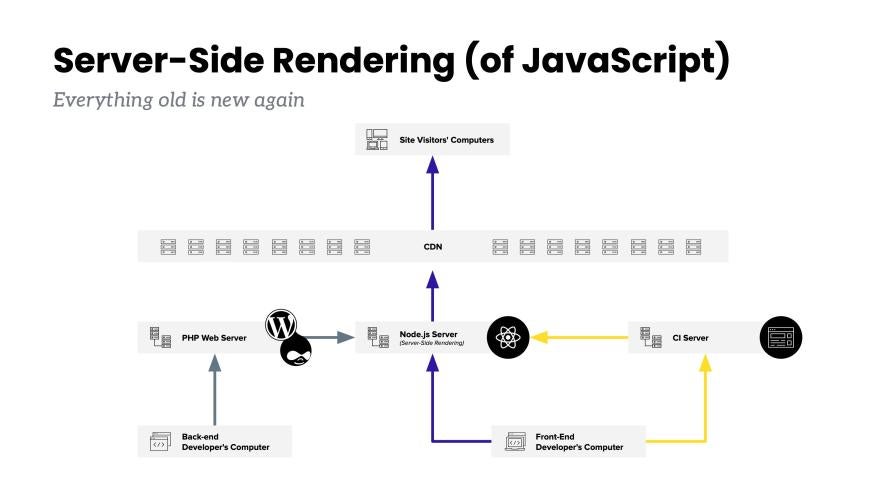


So here we are in the 2020s with more and more teams rediscovering the benefits of having a dynamic server (now it's probably a Node.js server) just generate a new HTML page when a visitor needs the page and the cache doesn't have a copy. End of story.
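The render-on-miss pattern can be sketched in a few lines. This is an illustrative in-memory model, not a production server: `CachedRenderer`, its time-to-live (TTL) parameter, and the injected clock are hypothetical choices made so the behavior is easy to follow. In a real deployment the cache would usually be the CDN, and the renderer a Node.js process.

```typescript
// Turns a URL path into HTML; stands in for templates meeting content.
type Render = (path: string) => string;

interface CacheEntry {
  html: string;
  storedAt: number; // milliseconds since some fixed start time
}

// Hypothetical server-side renderer with a TTL cache in front of it.
class CachedRenderer {
  private cache: Record<string, CacheEntry> = {};
  private render: Render;
  private ttlMs: number;
  renderCount = 0;

  constructor(render: Render, ttlMs: number) {
    this.render = render;
    this.ttlMs = ttlMs;
  }

  // `now` is passed in so the example is deterministic.
  get(path: string, now: number): string {
    const hit = this.cache[path];
    if (hit !== undefined && now - hit.storedAt < this.ttlMs) {
      return hit.html; // fresh cached copy: no rendering needed
    }
    this.renderCount++;
    const html = this.render(path); // miss or stale: assemble the page now
    this.cache[path] = { html, storedAt: now };
    return html;
  }
}

const renderer = new CachedRenderer((path) => `<h1>Rendered ${path}</h1>`, 60_000);
renderer.get("/pricing", 0); // miss: renders
renderer.get("/pricing", 30_000); // hit: served from cache
renderer.get("/pricing", 90_000); // expired: renders again
console.log(renderer.renderCount); // 2
```

The TTL is the tradeoff knob: a shorter one lets editors see changes sooner at the cost of more rendering work on the server.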

The Bleeding Edge

Wait, this story never really ends. And I didn't explain that "cache" part. Many of these diagrams have a "CDN" layer that I've barely mentioned. The Content Delivery Network (CDN) is, unsurprisingly, a network of computers spread around the globe that sits in front of these other computers and servers, so that when anyone anywhere in the world requests a web page, they're getting it from somewhere close by.

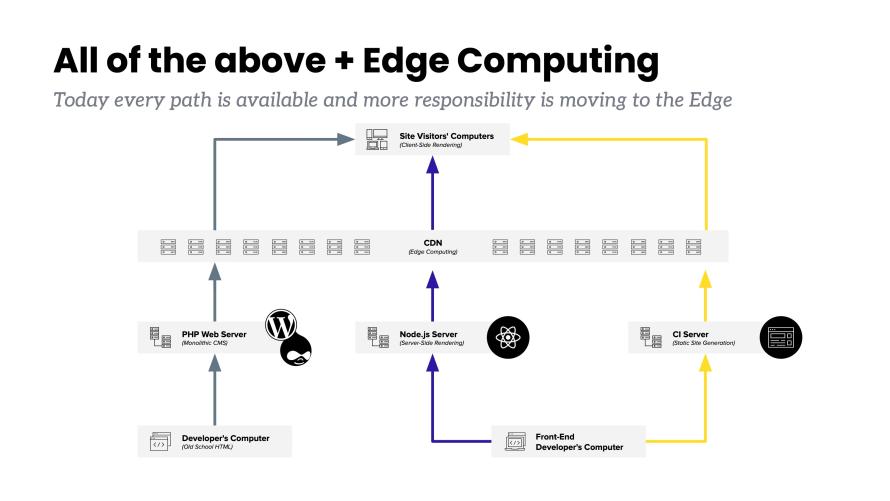

Image

For that to work, there are conventions (mostly HTTP headers) used between the CDN and the servers behind it for determining how long a version of any given web page can be held and cached by the CDN.
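As a rough illustration of those conventions, an origin server can tell a shared cache such as a CDN to keep a page longer than browsers do. `Cache-Control` with `max-age` (applies to all caches) and `s-maxage` (applies to shared caches only, overriding `max-age` there) are standard HTTP directives. The `cacheHeaders` and `isFreshForCdn` helpers and the durations below are hypothetical, and the freshness check is simplified to look only at `s-maxage`.

```typescript
// Builds a response header telling browsers and CDNs how long to cache a page.
// Durations are illustrative, not recommendations.
function cacheHeaders(browserSeconds: number, cdnSeconds: number): Record<string, string> {
  return {
    // max-age applies to every cache; s-maxage overrides it for shared caches (CDNs).
    "Cache-Control": `public, max-age=${browserSeconds}, s-maxage=${cdnSeconds}`,
  };
}

// Simplified check: may the CDN still serve its stored copy?
// `ageSeconds` is how long ago the CDN fetched it. Ignores every directive except s-maxage.
function isFreshForCdn(headers: Record<string, string>, ageSeconds: number): boolean {
  const match = /s-maxage=(\d+)/.exec(headers["Cache-Control"] ?? "");
  return match !== null && ageSeconds < Number(match[1]);
}

const headers = cacheHeaders(60, 600);
console.log(headers["Cache-Control"]); // public, max-age=60, s-maxage=600
console.log(isFreshForCdn(headers, 300)); // true
console.log(isFreshForCdn(headers, 900)); // false
```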

And because caching is one of the two hard problems in computer science (along with naming things and off-by-one errors), for much of the history in which the CDN box has appeared in these diagrams, it has been advantageous to keep the CDN layer as dumb and simple as possible. The CDN can make the whole system faster or more resilient, but it has not been the answer to the question "What computer assembles the website?"

Well, it's becoming the answer. Whether it's the Edge Side Includes our co-founder David Strauss has talked about for more than a decade, image optimization, WebAssembly or other small runtimes, there is a lot of energy right now pointed at moving more and more responsibility to the edge.
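Edge Side Includes show what "the CDN assembles the website" can mean in practice. The origin sends a page containing `<esi:include src="..."/>` tags, and the edge swaps each tag for a separately cached fragment. The toy function below performs only that substitution; real ESI processors also fetch fragments, handle errors, and support more of the ESI language. The `assembleAtEdge` name and the fragment table are hypothetical.

```typescript
// Fragments the edge already has cached, keyed by URL path (hypothetical data).
const fragmentCache: Record<string, string> = {
  "/fragments/header": "<header>Acme Co.</header>",
  "/fragments/cart": "<div>Cart: 2 items</div>",
};

// Replace each <esi:include src="..."/> tag with the matching cached fragment.
// Unknown fragments become empty strings in this sketch.
function assembleAtEdge(page: string, fragments: Record<string, string>): string {
  return page.replace(/<esi:include\s+src="([^"]+)"\s*\/>/g, (_tag: string, src: string) =>
    fragments[src] ?? ""
  );
}

const originPage =
  '<esi:include src="/fragments/header"/><main>Product page</main><esi:include src="/fragments/cart"/>';
console.log(assembleAtEdge(originPage, fragmentCache));
// <header>Acme Co.</header><main>Product page</main><div>Cart: 2 items</div>
```

The appeal is that the shared page can be cached for a long time, while a small, fast-changing fragment like the cart is cached separately or not at all.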

Through our partnership with Fastly, we can keep close tabs on the cutting edge. Our Advanced Global CDN product enables us to test the waters with individual customers for the newest available features.

But for the vast majority of our customers, the value of Pantheon comes from guardrails. While the puck is definitely moving toward the CDN, we do not yet know how quickly or how much of the puck will be there.

So What?

I find it helpful to trace this sort of history as a reminder for the present when I hear or see people making bold proclamations about web architectures framed in absolute terms of right and wrong. That tone of righteousness could be found at any point in this history. And at every point, a sweeping set of pressures was just around the corner, coming to knock over any architecture billed as the one true answer for every site.

That being said, this high-level overview gives me increased confidence in the path Pantheon is taking with Front-End Sites, which support both SSG and SSR with Node.js. In our present Early Access phase, though, we are seeing plenty of hints that while SSG has strong adoption among the members of our community who jumped aboard at its peak, most of our community will likely find more success with the Server-Side Rendering model, which is closer to what WordPress and Drupal have done for 20 years. That SSR model works best when we add fine-grained CMS-to-CDN cache control connections. As CDN-based architectures mature for other concerns, we will fold those in too.
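One common form of fine-grained CMS-to-CDN cache control is tagging cached pages with keys (Fastly calls these surrogate keys, sent as a space-separated `Surrogate-Key` response header) so that the CMS can purge exactly the pages an edit affects. The in-memory `TaggedCache` below is a hypothetical model of that idea, not Fastly's API or Pantheon's implementation.

```typescript
// Models a CDN cache whose entries carry surrogate keys
// (space-separated, as in a Surrogate-Key header). Hypothetical class.
class TaggedCache {
  private pages: Record<string, string> = {};
  private keysByPath: Record<string, string[]> = {};

  store(path: string, html: string, surrogateKeyHeader: string): void {
    this.pages[path] = html;
    this.keysByPath[path] = surrogateKeyHeader.split(" ").filter((k) => k.length > 0);
  }

  // Called when the CMS saves content: drop only the pages tagged with that key.
  purgeKey(key: string): string[] {
    const purged = Object.keys(this.keysByPath).filter((path) =>
      (this.keysByPath[path] ?? []).includes(key)
    );
    for (const path of purged) {
      delete this.pages[path];
      delete this.keysByPath[path];
    }
    return purged;
  }

  has(path: string): boolean {
    return path in this.pages;
  }
}

const cdn = new TaggedCache();
cdn.store("/blog/launch", "<h1>Launch</h1>", "post-42 author-7");
cdn.store("/blog", "<ul><li>Launch</li></ul>", "post-list post-42");
cdn.store("/about", "<h1>About</h1>", "page-3");

// An editor updates post 42: the post page and the listing that shows it are purged.
const purgedPaths = cdn.purgeKey("post-42");
console.log(purgedPaths);
console.log(cdn.has("/about")); // true
```

Compared with a blanket TTL, this lets unrelated pages stay cached indefinitely while edited content still appears almost immediately.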

At Pantheon we say "website operations is a team sport," and we will encourage and support the models most likely to bring the most success to the most professional teams. Ask for access to Front-End Sites now to evaluate whether it is the best answer for your team.