The Pantheon Blog


Latest insights

Navigating the Noise: A Look at Bot Traffic on Pantheon

All's FAIR: Why WordPress needs decentralization

Pantheon protects you from the latest WordPress security exploits

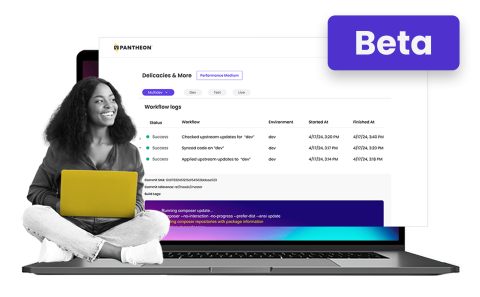

Modernizing Pantheon's Site Dashboard

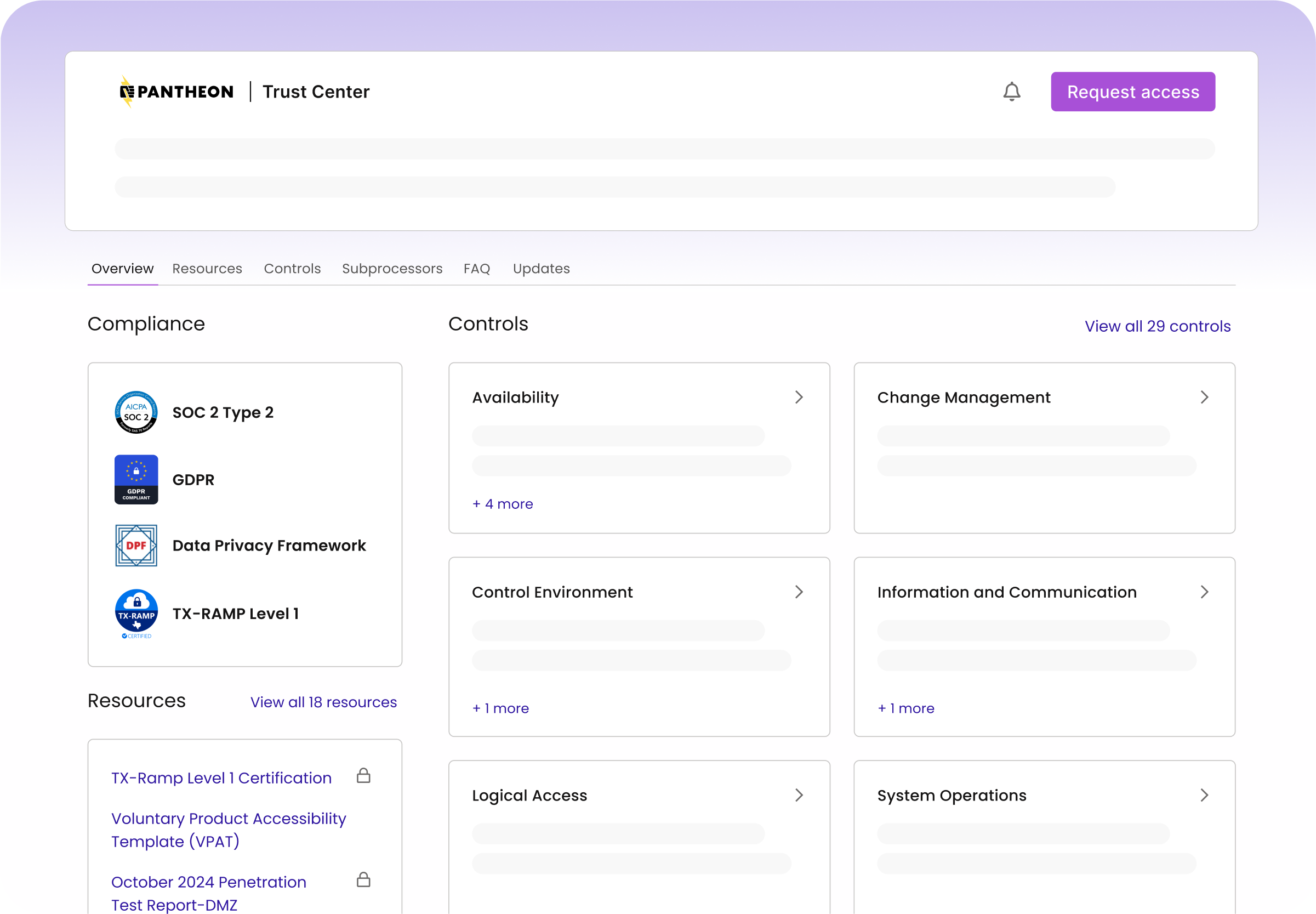

Introducing Pantheon’s Trust Center: Transparency Meets Performance

Why We Created a "Push to Pantheon" GitHub Action

Enhanced Drupal Integration for Content Publisher

A WordPresser goes to DrupalCon Atlanta 2025

Announcing 2025 Pantheon Partner Summit Award Winners

A New Era for Customer Experience: Introducing Pantheon’s CCO

Connecting Enrollment and Web Teams for Student Success

What's Cooking at Pantheon's DrupalCon Booth 2025
