The Strategic Advantages

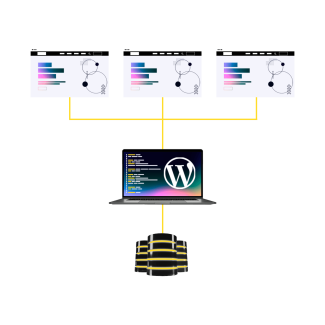

Multisite transforms how you manage multiple WordPress sites. Instead of juggling separate installations, you control everything from one dashboard. This centralized approach delivers real efficiency gains:

Resource Efficiency: Share WordPress core files, themes, and plugins across all sites. One codebase serves your entire network, dramatically reducing server overhead and hosting costs. For organizations managing 10+ sites, the savings are substantial.

Instant Scalability: Need a new site? Create it in minutes, not hours. No server setup, no WordPress installation, no theme configuration. Your network grows as fast as your business demands.

Understanding the Trade-offs

Every powerful tool has limitations. Multisite's shared architecture creates both opportunities and challenges:

Shared Database Considerations: All sites share one database, enabling seamless user management across your network. Staff can access multiple sites with single sign-on. But this connectivity means security requires extra attention—a breach in one site could potentially affect others. The solution? Implement robust security protocols and regular monitoring.

Code Management Reality: Themes and plugins install at the network level. This streamlines updates (patch once, deploy everywhere) but requires careful vetting. Because every site runs the same code, one problematic plugin, especially a network-activated one, can affect every site. Smart Multisite administrators maintain strict plugin approval processes.

Infrastructure Planning: Your sites share server resources. A traffic spike on one site impacts the entire network. Plan capacity for your busiest periods, not average loads. Quality hosting becomes non-negotiable.
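To make the "peak, not average" point concrete, here is a minimal sketch of the arithmetic. The site names, hourly request counts, and the 25% headroom factor are all hypothetical values chosen for illustration; substitute figures from your own analytics.

```python
# Hypothetical hourly request counts per site (made-up numbers for illustration).
hourly_requests = {
    "main-site": [120, 150, 900, 200],
    "blog": [80, 90, 100, 700],
    "shop": [60, 400, 950, 100],
}


def network_load(per_site):
    """Sum requests across all sites for each hour, since they share one server."""
    return [sum(hour) for hour in zip(*per_site.values())]


def capacity_targets(per_site, headroom=1.25):
    """Compare average and peak combined load; headroom is an assumed safety margin."""
    combined = network_load(per_site)
    average = sum(combined) / len(combined)
    peak = max(combined)
    return {"average": average, "peak": peak, "provision_for": peak * headroom}


targets = capacity_targets(hourly_requests)
print(targets)
```

In this made-up data set the busiest combined hour is roughly twice the average, so a server sized for the average would be overwhelmed exactly when several sites are busy at once.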

Read about WordPress Hosting, WordPress for the Enterprise, and how to make the most of the WordPress Gutenberg editor.

WordPress Multisite vs. Multiple Single WordPress Installations

| Criterion to consider | Multisite | Single Installations |
|---|---|---|
| Database shared between sites | Yes | No |
| Codebase shared between sites | Yes | No |
| Server resources shared between sites | Yes | Yes or No* |
| User accounts shared between sites | Yes | No |
| Risk of all sites going down if there are issues | Yes | No |
| Plugins and themes must be updated per site | No | Yes |