How Pantheon Protects Your Site from Software Supply Chain Risks in Open Source

Leveraging open source in software development is now the industry norm, including in virtually every website project. The advantages of building on top of an open-source CMS far outweigh those of building everything from scratch. Open source also wins when weighed against the costs, risks, and limitations of being locked into a proprietary SaaS vendor. But there’s no such thing as a free lunch: open source comes with long-term maintenance responsibilities. Beyond just “keeping up” with patches and updates, enterprise organizations have long known that they need to manage their software supply chains holistically.

It’s worth noting that software supply chain risk, where an unexpected, unwanted, or even malicious change is introduced upstream, isn’t unique to open source. While the infamous npm left-pad incident introduced many organizations to the concept, the SolarWinds hack, among the most severe supply chain compromises on record, targeted proprietary software in the Microsoft Windows ecosystem.

Regardless of their stack, professional web teams should take these risks seriously for both the core CMS and contributed modules and plugins. Custom code presents challenges too, especially when an outside agency or contractor develops it. In this post, I will highlight some of Pantheon’s security capabilities that help our customers mitigate supply chain risk while balancing stability and speed.

Beyond inspecting where and how you source your third-party code, Pantheon encourages or enforces several practices that keep broken or malicious code from reaching a live website once that code is downloaded:

- Role-based access control limits who is allowed to make deployments to one site or a selection of sites.

- Locked-down file permissions in our Live (and Test) environments close off one of the most worrisome attack vectors in the WordPress ecosystem.

- A Git-backed deployment pipeline makes code changes auditable.

- Autopilot uses Visual Regression Testing to identify the breakages caused by third-party code updates, especially in plugins.

- Upstreams provide a checkpoint before core updates go out to individual sites.

- Composer clearly separates out first-party code from third-party code.

Multiple mechanisms for updating plugins

While the SVN-based WordPress.org repository is the most commonly used mechanism for distributing plugins in the WordPress community, plugin development tends to happen on more modern platforms like GitHub. Having WordPress.org in the supply chain between plugin developers and individual sites provides some benefits like community review and ratings.

However, some teams may want to get their plugins straight from the source. Our documentation describes how to:

- Get updates directly from a Git repository using Git Updater.

- Use WP-CLI to install plugins that use their own update infrastructure like ACF.

- Download plugins from a mix of sources using Composer.

While inspecting the provenance of your plugins can mitigate left-pad-like risk, most teams should start their analysis closer to home. Who can deploy changes to the live site?

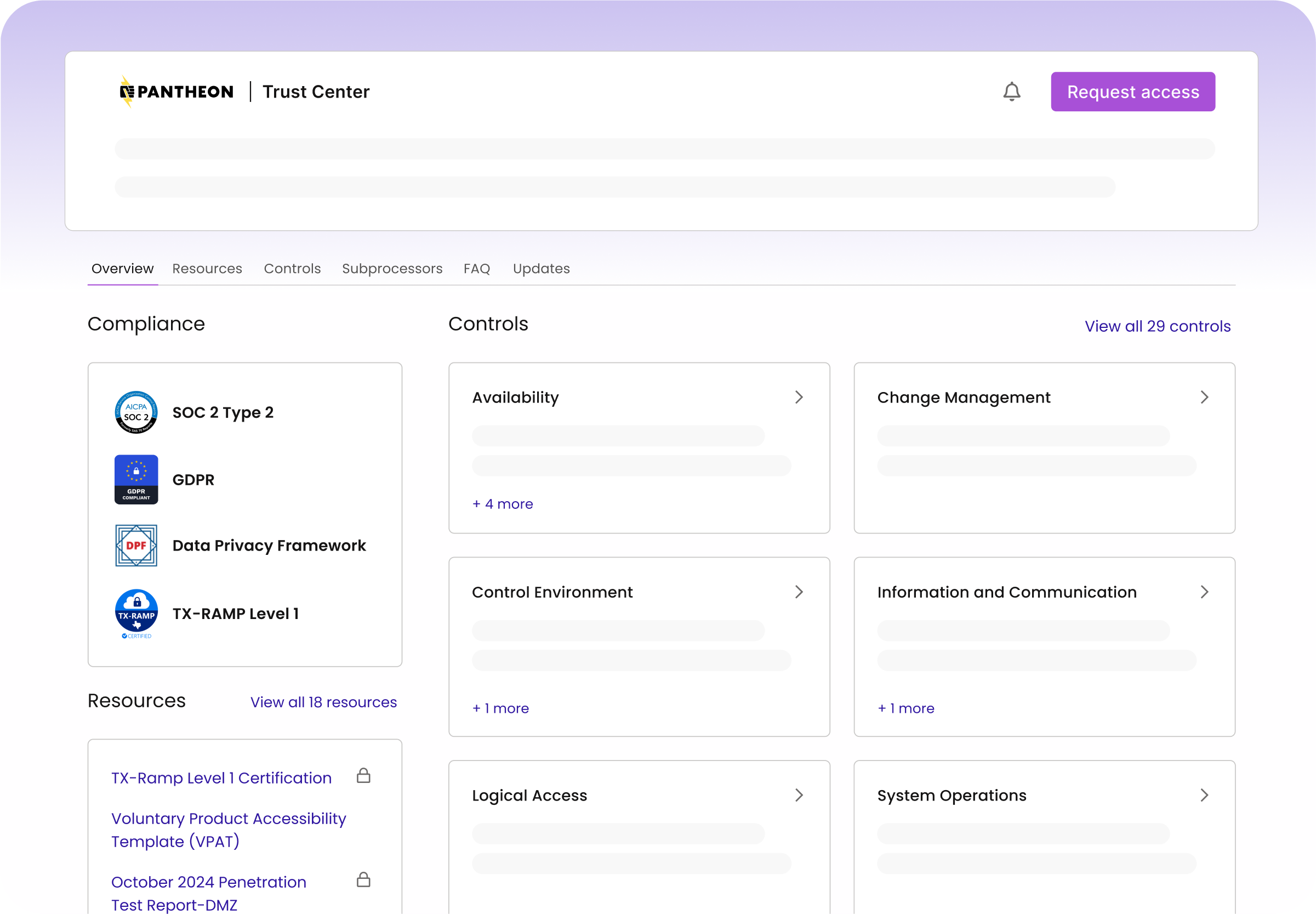

Permissions: Who is allowed to update what?

One team's safety standards are another team's burdensome red tape. Those differences are why Pantheon supplies a Role-Based Access Control model that lets administrators grant some developers the ability to work on code in a Dev environment without being able to deploy it to a Live environment.
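To make the idea concrete, here is a minimal Python sketch of a deploy-permission check. The role names (`developer`, `team_member`), the per-site grants, and the `can_deploy` function are hypothetical and do not mirror Pantheon's actual roles or API; the sketch only shows how a role can allow work in Dev while blocking deploys to Live, with access scoped site by site.

```python
from dataclasses import dataclass, field

# Hypothetical roles and deploy targets, for illustration only.
# They are not Pantheon's actual role definitions.
ROLE_DEPLOY_TARGETS: dict[str, set[str]] = {
    "developer": {"dev"},                    # may push code to Dev only
    "team_member": {"dev", "test", "live"},  # may promote code all the way to Live
}


@dataclass
class Grant:
    """One user's role on a set of sites (e.g. an agency granted a single site)."""
    user: str
    role: str
    sites: set[str] = field(default_factory=set)


def can_deploy(grants: list[Grant], user: str, site: str, env: str) -> bool:
    """Return True if any grant lets `user` deploy code to `env` on `site`."""
    return any(
        g.user == user
        and site in g.sites
        and env in ROLE_DEPLOY_TARGETS.get(g.role, set())
        for g in grants
    )


grants = [
    Grant("alice", "team_member", {"main-site"}),
    Grant("agency-dev", "developer", {"main-site", "microsite"}),
]
print(can_deploy(grants, "agency-dev", "main-site", "dev"))   # True
print(can_deploy(grants, "agency-dev", "main-site", "live"))  # False
print(can_deploy(grants, "alice", "main-site", "live"))       # True
```

Keeping the grant list separate from the role table means an agency can be added to one site without touching anyone else's access.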

Similarly, some of our largest customers, such as universities, separate the team that maintains a shared codebase, with common elements like SSO plugins and a parent theme, from the teams building individual sites.

Pantheon allows for a great deal of variability in how teams are structured. Our concept of a "supporting workspace" is unique: it allows large organizations to grant individual web agencies access on a site-by-site basis. Still, whatever the team structure, we have a hard rule against changing code in production environments.

A read-only filesystem: Why plugins can't update in a Pantheon Live environment

It is common in the WordPress community for live sites to automatically apply updates to plugins straight from the WordPress repository. This behavior provides some security benefits when updates are applied very quickly.

However, for PHP to change executable PHP code in a live environment, the filesystem needs loose file permissions. At Pantheon, we only allow writes to the uploads directory because we consider it an unacceptable security risk for an application to be able to rewrite its own executable code in a live environment. Much of WordPress's reputation for security problems comes from attacks that exploit this vector.
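Because loose permissions are the vector described above, one rough way to audit a self-hosted WordPress codebase is to look for PHP files that other users can write. The Python sketch below is a heuristic, not a Pantheon tool: it assumes the web server runs as a different user from the one that owns the code, so any group- or world-writable `.php` file is one the running application could potentially rewrite.

```python
import os
import stat


def find_loose_php_files(root: str) -> list[str]:
    """List PHP files under `root` whose permission bits allow group or world writes.

    Heuristic: assumes the web server runs as a user other than the code's owner,
    so group/world-writable PHP files are ones the running application could rewrite.
    """
    loose = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".php"):
                continue
            path = os.path.join(dirpath, name)
            mode = os.stat(path).st_mode
            # Flag the file if either the group-write or the other-write bit is set.
            if mode & (stat.S_IWGRP | stat.S_IWOTH):
                loose.append(os.path.relpath(path, root))
    return sorted(loose)
```

Calling `find_loose_php_files` on a site's document root (the path is up to you) returns relative paths worth a closer look; an empty list means no PHP file has group or world write bits set.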

At the same time, for the majority of WordPress sites, where the live environment is the only environment, that tradeoff (loose file permissions in exchange for automatic updates) is acceptable. Professional teams managing mission-critical sites, however, need more than one environment to keep their supply chain secure.

Updating plugins through a Git-backed deployment pipeline

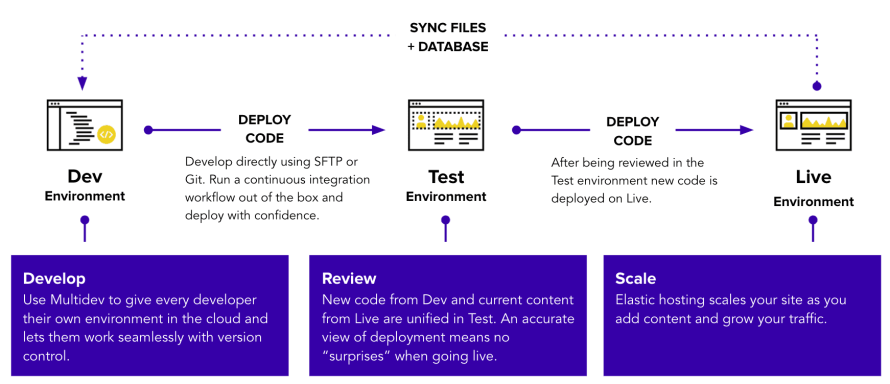

Instead of a CMS updating itself in a production environment, the code for a site on Pantheon flows through a Git-backed deployment pipeline. Every site on Pantheon includes at least three environments: Dev, Test, and Live.

Test and Live both lock down file permissions. Dev environments can toggle between this locked-down "Git mode," where only Git pushes can change PHP code, and an "SFTP mode," which makes the PHP files in Dev writable so that code changes can be uploaded from developers' laptops or applied through WP-CLI or WordPress itself.
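The rules above can be modeled as a small state machine. This is a toy Python sketch, not Pantheon's implementation: the `Pipeline` class, its method names, and the strings standing in for code versions are all hypothetical. It encodes two points from the text (Test and Live never allow PHP writes; Dev allows them only in SFTP mode) plus one assumption of this model: code is promoted one environment at a time, and every change is written to an audit log.

```python
from typing import Optional

ORDER = ["dev", "test", "live"]


class Pipeline:
    """Toy model of a Dev -> Test -> Live pipeline (an illustration, not Pantheon's code)."""

    def __init__(self) -> None:
        self.code: dict[str, Optional[str]] = {env: None for env in ORDER}
        self.dev_sftp_mode = False      # Dev starts in Git mode
        self.audit_log: list[str] = []  # every code change is recorded

    def can_write_php(self, env: str) -> bool:
        # Test and Live are always locked down; Dev is writable only in SFTP mode.
        return env == "dev" and self.dev_sftp_mode

    def git_push(self, version: str) -> None:
        # In Git mode, a push is how new code reaches Dev.
        self.code["dev"] = version
        self.audit_log.append(f"push {version} -> dev")

    def sftp_write(self, version: str) -> None:
        # Direct file changes (uploads, WP-CLI, WordPress itself) need SFTP mode.
        if not self.can_write_php("dev"):
            raise PermissionError("Dev is in Git mode: PHP files are read-only")
        self.code["dev"] = version
        self.audit_log.append(f"sftp {version} -> dev")

    def deploy(self, target: str) -> None:
        # Assumption of this model: code moves one step, Dev -> Test, then Test -> Live.
        idx = ORDER.index(target)
        if idx == 0:
            raise ValueError("Dev receives code from Git or SFTP, not from a deploy")
        source = ORDER[idx - 1]
        if self.code[source] is None:
            raise RuntimeError(f"nothing to deploy: {source} has no code yet")
        self.code[target] = self.code[source]
        self.audit_log.append(f"deploy {self.code[target]} {source} -> {target}")
```

Because Live can only ever receive what Test already holds, the audit log answers "how did this code get here?" one step at a time.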

Applying updates quickly and confidently with visual regression testing

Our fundamental security stance on the file permissions of live environments does not change the fact that teams still want to get code updates out fast. That's why Pantheon supplies Autopilot to keep the software supply chain moving.

This feature regularly checks for updates to plugins and core and applies them to a Multidev environment (Multidev environments are like our Dev environment, except that each one is created for a Git branch). Autopilot takes screenshots before and after applying updates and compares them, a technique called Visual Regression Testing. When no pixels move after Autopilot updates a plugin in a Multidev environment, that's a strong indication that the update is safe to merge to the Dev environment and then deploy to Test and Live.
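Autopilot's internals are not described here, so the Python sketch below only illustrates the basic idea of visual regression testing. Screenshots are represented as nested lists of RGB tuples (an assumption; a real tool would decode image files), and the `threshold` parameter is a hypothetical knob where `0.0` corresponds to "no pixels moved."

```python
Pixel = tuple[int, int, int]         # (R, G, B)
Screenshot = list[list[Pixel]]       # rows of pixels


def changed_pixel_ratio(before: Screenshot, after: Screenshot) -> float:
    """Fraction of pixels that differ between two screenshots (1.0 if sizes differ)."""
    if len(before) != len(after) or any(len(a) != len(b) for a, b in zip(before, after)):
        return 1.0
    total = sum(len(row) for row in before)
    if total == 0:
        return 0.0
    changed = sum(
        1
        for row_before, row_after in zip(before, after)
        for p_before, p_after in zip(row_before, row_after)
        if p_before != p_after
    )
    return changed / total


def looks_safe_to_merge(before: Screenshot, after: Screenshot, threshold: float = 0.0) -> bool:
    """True when the visual change is at or below `threshold` (0.0 means no pixel moved)."""
    return changed_pixel_ratio(before, after) <= threshold
```

With a 2x2 white screenshot where one pixel turns red after an update, `changed_pixel_ratio` reports a quarter of the pixels changed, so the default zero threshold would hold the update back for human review.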

Upstreams: How we supply updates to core and must-use plugins

While it is the norm in the WordPress community for most plugin updates to come from the WordPress plugin repository, that website is not the only distribution mechanism. Premium themes requiring a paid license usually get distributed through their own channels. Some teams prefer to pull plugin updates straight from GitHub as mentioned above.

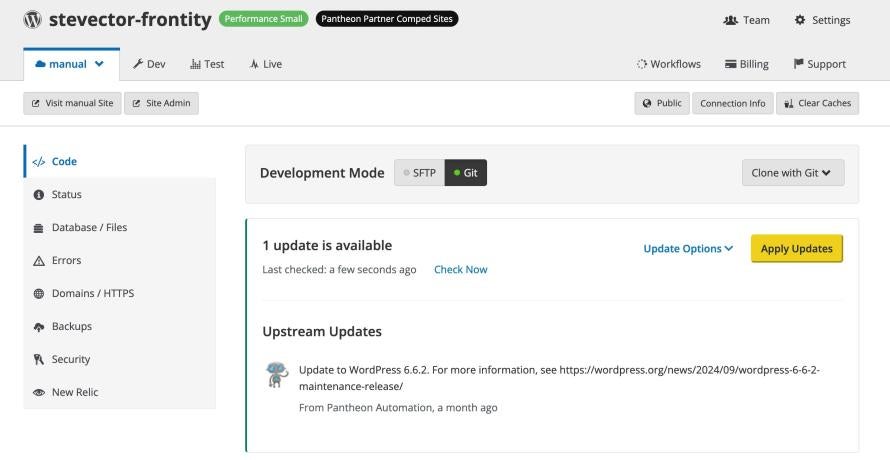

For core itself, Pantheon has always supplied updates to customers through copies of core on GitHub. Those updates then appear as one-click updates in the dashboard.

Our WordPress repo also adds a wp-config-pantheon.php file, which isolates our automatic configuration of database credentials from the changes teams commonly make to wp-config.php. We also supply small enhancements, like a "must-use" plugin that handles some compatibility details for our hosting environment.

The separate step between a new version of WordPress coming out on WordPress.org and that update merging into our repo for consumption by sites on Pantheon is mostly a minor implementation detail. We like using Git in our workflows. Yet, that separate step also gives us a clear place where we can do any final checks of a core update before it shows up in the dashboard for our customers.
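A checkpoint of this kind can be pictured as a filter between upstream releases and what the dashboard offers. In the hypothetical Python sketch below, `released` stands for versions published upstream and `approved` for those that have passed whatever final checks the upstream maintainer runs; a site is only offered the newest approved version newer than its own. The version parsing is deliberately naive and ignores pre-release tags.

```python
from collections.abc import Iterable
from typing import Optional


def parse_version(version: str) -> tuple[int, ...]:
    """Naive parser for dotted numeric versions such as "6.4.3" (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def available_update(site_version: str, released: Iterable[str], approved: set[str]) -> Optional[str]:
    """Newest released version that is newer than the site's and has passed upstream checks."""
    current = parse_version(site_version)
    candidates = [v for v in released if v in approved and parse_version(v) > current]
    return max(candidates, key=parse_version, default=None)
```

A release that exists upstream but has not yet been approved simply never shows up as an available update, which is the point of having a separate step.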

Composer: An increasingly appealing option

It has been over a decade since Pantheon architected that Git-centric mechanism for distributing core updates, and that model still works well for most of the WordPress sites on Pantheon.

Still, for teams pushing the boundaries of WordPress development, there is a newer method in this space: Composer. ("New" is a relative term. We've shown WordPress + Composer examples going back at least seven years.)

The concepts and mechanisms of Composer are very similar to those of the more ubiquitous npm tooling in the JavaScript community. Composer lets developers list exactly which dependencies their site or application needs. In composer.json, you list the "packages" you need, such as individual plugins, WordPress core, themes, and even PHP packages that are not WordPress-specific at all.
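As an illustration, the Python sketch below parses an example composer.json and groups its requirements. The package names and version constraints are illustrative, and the grouping rules (the `wpackagist-plugin/` and `wpackagist-theme/` prefixes, treating `roots/wordpress` and `johnpbloch/wordpress` as core, and treating names without a slash, such as `php`, as platform requirements) are assumptions of this sketch rather than rules Composer defines.

```python
import json

# Illustrative composer.json; package names and constraints are examples only.
COMPOSER_JSON = """
{
  "repositories": [
    {"type": "composer", "url": "https://wpackagist.org"}
  ],
  "require": {
    "php": ">=8.1",
    "roots/wordpress": "^6.4",
    "wpackagist-plugin/akismet": "^5.3",
    "wpackagist-theme/twentytwentyfour": "^1.0",
    "guzzlehttp/guzzle": "^7.8"
  }
}
"""

# Assumed core package names for this sketch.
CORE_PACKAGES = {"roots/wordpress", "johnpbloch/wordpress"}


def group_requirements(composer_json: str) -> dict[str, list[str]]:
    """Group the "require" entries of a composer.json document by rough kind."""
    groups: dict[str, list[str]] = {}
    for name, constraint in json.loads(composer_json).get("require", {}).items():
        if "/" not in name:
            kind = "platform"          # e.g. "php": a requirement, not a download
        elif name in CORE_PACKAGES:
            kind = "wordpress-core"
        elif name.startswith("wpackagist-plugin/"):
            kind = "wordpress-plugin"
        elif name.startswith("wpackagist-theme/"):
            kind = "wordpress-theme"
        else:
            kind = "other"             # general-purpose PHP packages
        groups.setdefault(kind, []).append(f"{name} {constraint}")
    return groups


for kind, entries in group_requirements(COMPOSER_JSON).items():
    print(kind, entries)
```

Even this crude grouping shows core, plugins, themes, and generic PHP libraries side by side in one short file.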

Through the lens of inspecting the security of your software supply chain, Composer can make things more comprehensible by:

- Listing in the relatively short composer.json file all the places Composer should look for software updates (like WPackagist, which wraps the WordPress repository, or packagist.org, which is the default for the PHP world, or even individual GitHub repositories).

- Recording, in the much more verbose composer.lock file, exactly which versions of which packages are to be downloaded and from where. This file includes details like hashes that reduce or eliminate the possibility of a package being “poisoned” or altered.
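To show what the lock file makes auditable, this Python sketch lists each locked package with its download URL and checks a downloaded archive against the lock entry's `dist.shasum`. The field names (`packages`, `packages-dev`, `dist`, `source`, `url`, `shasum`) follow the composer.lock layout as we understand it, and the SHA-1 comparison is a simplified illustration, not a description of how Composer verifies downloads internally.

```python
import hashlib
import json
from typing import Optional


def summarize_lock(lock_json: str) -> list[tuple[str, str, str]]:
    """Return (name, version, download URL) for every locked package."""
    lock = json.loads(lock_json)
    rows = []
    for pkg in lock.get("packages", []) + lock.get("packages-dev", []):
        dist = pkg.get("dist") or {}
        source = pkg.get("source") or {}
        # Prefer the archive URL; fall back to the VCS source URL.
        rows.append((pkg["name"], pkg["version"], dist.get("url") or source.get("url", "")))
    return rows


def verify_dist(pkg: dict, archive: bytes) -> Optional[bool]:
    """Compare a downloaded archive with the SHA-1 recorded in dist.shasum.

    Returns None when the lock entry has no shasum (it is frequently empty),
    because then there is nothing to compare against.
    """
    expected = (pkg.get("dist") or {}).get("shasum") or ""
    if not expected:
        return None
    return hashlib.sha1(archive).hexdigest() == expected
```

Reading `summarize_lock` output is one quick way to answer "where does each piece of code come from?" without digging through commit history.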

At Pantheon, we have even found that Composer lets us update our recommendations more efficiently. For instance, we recently started including licenses for Object Cache Pro, which effectively removes the need for the wp-redis plugin we wrote many years ago. With Composer, that switch is simple. Had we shipped wp-redis as a must-use plugin, the switch would have significantly complicated our updated guidance. Reducing complexity is one of Pantheon's guiding lights.

Simplifying security by reusing the best available tools

While there are lots of reasons to use Composer (in a recent "Elevator Pitch" Chris Reynolds highlighted the avoidance of errors), the one that stands out to me is the way it simplifies the question "How does this file/directory get updated?" In both the WordPress and Drupal communities, I have struggled with the fact that the processes and rules are different for:

- The custom code I wrote specific to this site

- The custom code I wrote that I'm trying to share across projects

- Free plugins/modules

- Premium plugins

- Core

- The custom theme for a site

- Files like robots.txt that come with core but are commonly modified per site

That list is too long, and in practice, it is probably longer. Composer prompts teams to boil the distinction down to:

- Code written specifically for this project that is version-controlled directly in the repo.

- Code downloaded from somewhere else.
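The two-way split above can be checked mechanically. The hypothetical Python sketch below derives the directories Composer writes to from an `installer-paths` map (the convention used by the composer/installers plugin) plus the default `vendor/` directory, then labels any repository path as first-party or third-party. It assumes `{$name}` expands to the package name without its vendor prefix and ignores other placeholders and custom install locations.

```python
import json
from pathlib import PurePosixPath


def managed_dirs(composer_json: str, installed: dict[str, str]) -> set[str]:
    """Directories Composer writes to, given package name -> type from composer.lock.

    Assumes composer/installers-style "installer-paths" where {$name} is the
    package name without its vendor prefix; everything else lands in vendor/.
    """
    paths = json.loads(composer_json).get("extra", {}).get("installer-paths", {})
    dirs = {"vendor"}
    for pattern, selectors in paths.items():
        for name, pkg_type in installed.items():
            if name in selectors or f"type:{pkg_type}" in selectors:
                short_name = name.split("/", 1)[1]
                dirs.add(pattern.replace("{$name}", short_name).rstrip("/"))
    return dirs


def classify(path: str, dirs: set[str]) -> str:
    """Label a repository path as third-party (Composer-managed) or first-party."""
    p = PurePosixPath(path)
    for d in dirs:
        managed = PurePosixPath(d)
        if p == managed or managed in p.parents:
            return "third-party"
    return "first-party"
```

With a hypothetical layout of `web/app/plugins/{$name}/` for plugins, a downloaded plugin's files classify as third-party while a custom plugin or theme in the same parent directory stays first-party, which is exactly the distinction that matters when auditing changes.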

That simplification makes the security of a site much easier to understand. It is easier to see how a codebase might change over time when the provenance of plugins is visible at the surface, in the composer.json file, instead of buried in "git log."

That simplification also has a homogenizing effect on the PHP ecosystem: more projects start to look the same. Seemingly huge decisions, like the choice between WordPress and Drupal (or Craft CMS and others), get abstracted away from the root of your repository. The CMS is not the entirety of your project; it is one of many packages your project depends on.

Such a change can be unsettling for teams accustomed to working in CMS-specific ways. Even the Drupal community's adoption of Composer required displacing its "drush make" tooling. Drush Make did pretty much the same thing as Composer, but in a Drupal-specific way. Duplicating general-purpose tooling like that is not a net benefit for security, stability and speed.

Teams and web ecosystems generally find peace of mind when they replace custom-built solutions with something broader, more mature and more widely supported.

After all, that's the same dynamic that led nearly all developers to drop their custom CMSes in favor of WordPress or Drupal in the first place.