"Because It's Faster, Of Course" Introducing LCache

Any website that wants to provide a speedy experience to logged-in users (e.g. content editors, forum participants) or even logged-out users who need a unique page (online shoppers, viewers seeing personalized content, etc.) needs an application object cache. These caches store built-up data objects (e.g. a user, a post, a node) between requests so that there’s less work for the CMS to do to serve a page that needs one of those objects in the future. They’re crucial.

Memcached was the original solution, and soon after Redis followed, using the same basic model but with a more featureful interface. Pantheon has provided Redis as a part of the platform for years, and in truth both solutions are quite good. But they both also have serious drawbacks.

First off, Redis and Memcached are both network-attached resources, and with enough cache data being sent back and forth, the network can become a bottleneck. Likewise, if a webpage requires a large number of cache requests, performance may suffer even if the amount of data is small; each cache fetch still has to do a complete round trip, and those milliseconds add up fast. Lastly, even though there should be no critical data in a cache layer, cache availability is mission-critical for busy sites, and neither Memcached nor Redis has amazingly awesome options for high availability.

Moving Ahead: LCache

My colleague David Strauss has been speaking about these problems for over a year now, and this summer we finally decided the time was right to do something about it; LCache was born. He unveiled the approach a few weeks ago at DrupalCon Dublin:

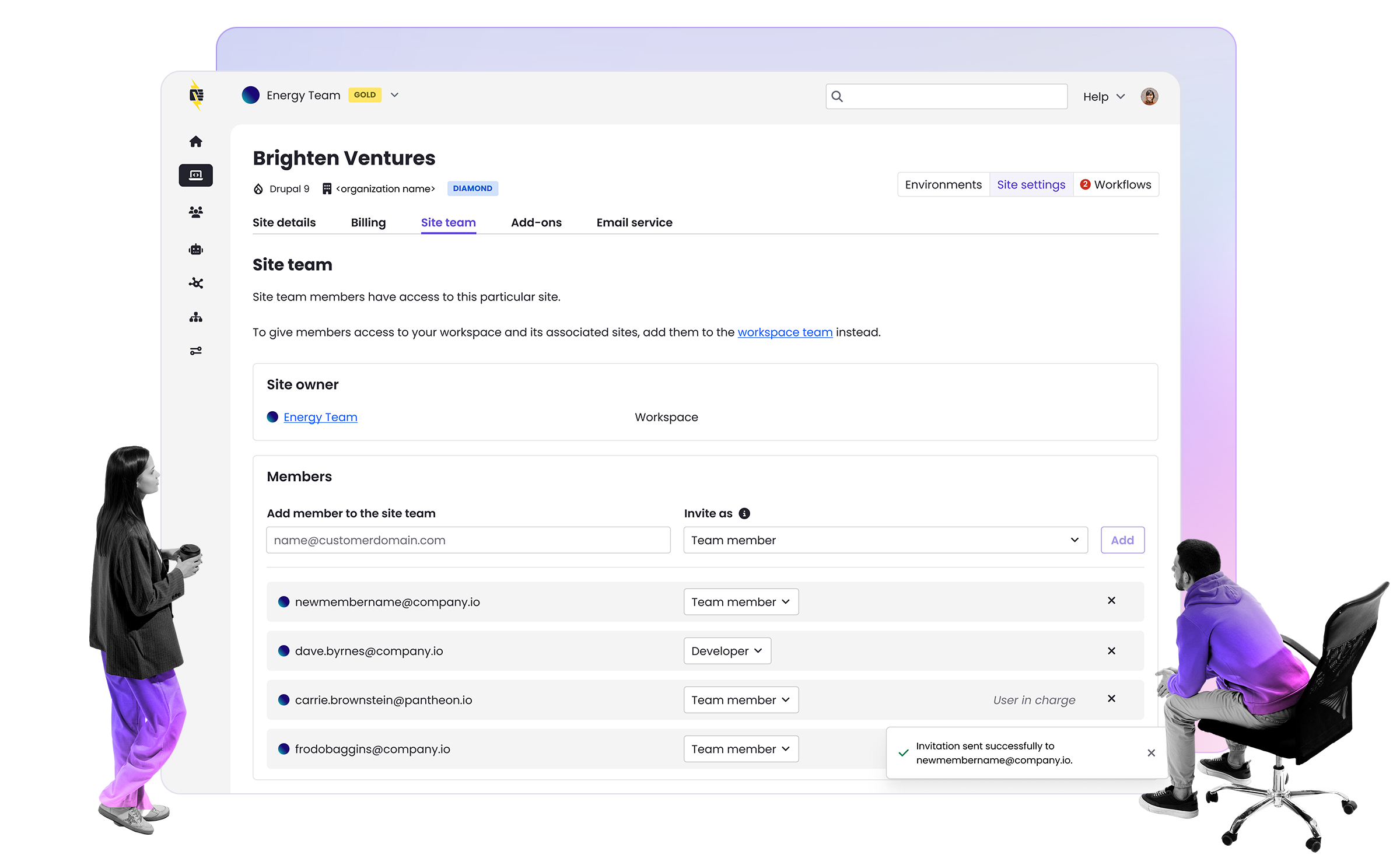

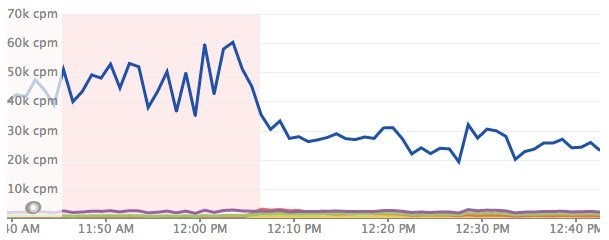

The theory behind LCache is simple. Most sites have a power-law distribution of how often cached objects are loaded: e.g. Drupal 7 loads all the values for variable_get() with every request; same deal with “alloptions” in WordPress. Taking a page from modern multicore CPU architecture, we establish multiple layers of cache so that frequently accessed items can be co-located with the application runtime, not over a network. With hot cache items stored locally in the “L1” cache, we can significantly improve the performance of sites, while also making them more stable:

Image

Not only does total query volume come down, but it also becomes more stable. These are both Good Things(™). The challenge in layering the cache is, of course, coherency: every local copy has to find out when an item changes somewhere else. Luckily, we’ve learned a lot about this within Pantheon in building our Valhalla filesystem, which uses a similar layered cache approach to provide the best possible consistency and speed for network-attached file storage.
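To make the layering idea concrete, here is a minimal sketch of a read-through two-layer cache. It is written in Python purely for illustration; LCache itself is a PHP library, and none of the names below (`SharedCache`, `LayeredCache`, `round_trips`) come from its API. The "network" is simulated by a counter, and coherency between servers is deliberately ignored here.

```python
class SharedCache:
    """Hypothetical stand-in for a network-attached cache (the "L2").

    Every call counts as one simulated network round trip.
    """

    def __init__(self):
        self._data = {}
        self.round_trips = 0

    def get(self, key):
        self.round_trips += 1
        return self._data.get(key)

    def set(self, key, value):
        self.round_trips += 1
        self._data[key] = value


class LayeredCache:
    """Hypothetical read-through cache: a local dict (the "L1") in front of L2."""

    def __init__(self, l2):
        self._l1 = {}
        self._l2 = l2

    def get(self, key):
        # Hot items are answered locally, with no round trip at all.
        if key in self._l1:
            return self._l1[key]
        # On an L1 miss, pay one round trip and remember the result locally.
        value = self._l2.get(key)
        if value is not None:
            self._l1[key] = value
        return value

    def set(self, key, value):
        # Write through to the shared layer, then keep a local copy.
        self._l2.set(key, value)
        self._l1[key] = value


# Usage: one hot item read 100 times, as with "alloptions" on every request.
l2 = SharedCache()
l2.set("alloptions", {"siteurl": "https://example.com"})
cache = LayeredCache(l2)
for _ in range(100):
    cache.get("alloptions")
print(l2.round_trips)  # 2: the initial set plus a single L1 miss
```

With a power-law access pattern, most reads look like the loop above: the first request pays the round trip and the rest are served from local memory.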

LCache combines well-established architectural patterns from modern CPU architecture, efficient data propagation from MySQL’s row-level replication, and our own hard-won experience on Valhalla to provide a true quantum leap in website object caching. Combined with PHP 7, it’s a huge upgrade in terms of performance for complex websites.
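One way to picture replication-style propagation is an ordered log of writes that each local cache replays to evict stale items. The Python sketch below is an assumption-laden illustration of that general idea, not LCache's implementation or API: `EventLog`, `LocalCache`, `synchronize`, and the in-memory list standing in for a shared database table are all hypothetical.

```python
class EventLog:
    """Hypothetical shared layer: an append-only, ordered list of write events."""

    def __init__(self):
        self.events = []  # tuples of (event_id, writer, key, value)

    def append(self, writer, key, value):
        event_id = len(self.events) + 1  # ids start at 1 and only grow
        self.events.append((event_id, writer, key, value))
        return event_id

    def since(self, event_id):
        # Events with an id strictly greater than event_id.
        return self.events[event_id:]

    def latest(self, key):
        # Most recent value written for key, or None if never written.
        for _, _, k, v in reversed(self.events):
            if k == key:
                return v
        return None


class LocalCache:
    """Hypothetical per-server L1 that stays coherent by replaying the log."""

    def __init__(self, name, log):
        self.name = name
        self.log = log
        self.items = {}
        self.last_applied = 0  # id of the last event this server has seen

    def synchronize(self):
        # Replay only new events; evict anything another server has written.
        for event_id, writer, key, _ in self.log.since(self.last_applied):
            if writer != self.name:
                self.items.pop(key, None)
            self.last_applied = event_id

    def set(self, key, value):
        self.log.append(self.name, key, value)
        self.items[key] = value

    def get(self, key):
        if key not in self.items:
            value = self.log.latest(key)
            if value is not None:
                self.items[key] = value
        return self.items.get(key)


# Usage: two web servers sharing one log.
log = EventLog()
web1 = LocalCache("web1", log)
web2 = LocalCache("web2", log)

web1.set("node:1", "draft")
web2.synchronize()
first = web2.get("node:1")      # loaded from the shared layer

web1.set("node:1", "published")
stale = web2.get("node:1")      # still the local copy until the next sync
web2.synchronize()
fresh = web2.get("node:1")      # evicted by the replayed event, then reloaded
print(first, stale, fresh)
```

In this sketch a server only has to ask "what changed since event N?", so the cost of staying coherent scales with the number of writes rather than the number of reads. How often to synchronize (for example, once at the start of each request) is a design choice this sketch leaves open.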

Composer Library and Alpha CMS Implementations Available

LCache’s core logic is built as an independent Composer library, with CMS compatibility layered on top. LCache doesn’t require Pantheon—you’re free to run it on your own infrastructure so long as your PHP build is modern and meets the requirements. CMS implementations are available for both Drupal 7 and Drupal 8, and there’s a WordPress plugin too—thanks to the efforts of Daniel Bachhuber, who also inspired the pithy but brilliant tagline: “Because it’s faster, of course.”

Right now it is still early days: the modules and plugin are currently considered alpha-level in stability. There are also exciting new developments still in progress, including administrative statistics visibility, better cache tuning options, and even a lightweight machine learning capability that can “autotune” the cache behavior, preventing suboptimal website code from abusing the cache layer.

However, we have helped several cache-heavy customers go live with LCache, leaving Redis in their rearview mirror with good results. If you’re interested in being an early adopter, you should reach out to Sales or Support. Otherwise, simply stay tuned as we continue to work towards an official 1.0 release.